Total addressable market (TAM) is defined as the revenue a company could realize if it had 100 per cent market share while still creating shareholder value. The ability to calibrate TAM is a major part of anticipating value creation.

This report provides a framework for estimating TAM through a process of triangulation. Three methods are used. The first is based on population, product and conversion. The second is a diffusion model, which addresses both the absolute size of the market and the rate of adoption. The third is a base rate check, which serves as a reality check.

Categorizing New Products

To assess market size, categorizing the product is a logical starting point. This allows us to appraise a company’s strategy for promoting the product. Companies can influence their TAM through pricing strategy and product enhancement. A pricing strategy is when a company sells its product at a discount in order to gain market share. Product enhancement reflects improvement in the product itself.

Current technologies that have applications for new customers and new technologies used by current customers are the categories where a TAM analysis is most relevant. TAM is tricky to analyze when both technology and customers are new.

Some specific ways to estimate TAM –

Market Size – Population, Product and Conversion

The first approach is to estimate absolute market size by multiplying the number of potential customers by the expected revenue per customer. The analysis has three parts. Population is the estimate of how many people could use the product. Product is the estimate of how many of them are likely to use it. Conversion is the analysis of the potential revenue from those users. For a more in-depth analysis of absolute market size, the factors that shape demand and supply can be considered.
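The multiplication described above can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from the report: the function name, the parameter names and every input value are assumptions chosen for the example.

```python
def estimate_tam(population, adoption_share, revenue_per_customer):
    """Absolute market size = potential customers x expected revenue per customer.

    population           -- people who could use the product
    adoption_share       -- fraction of that population likely to use it (0 to 1)
    revenue_per_customer -- expected revenue from each converted customer
    """
    if not 0.0 <= adoption_share <= 1.0:
        raise ValueError("adoption_share must be between 0 and 1")
    potential_customers = population * adoption_share
    return potential_customers * revenue_per_customer


# Hypothetical inputs, for illustration only.
tam = estimate_tam(population=50_000_000,
                   adoption_share=0.10,
                   revenue_per_customer=120.0)
print(f"Estimated TAM: {tam:,.0f}")
```

Keeping the three inputs separate makes it easy to test how sensitive the estimate is to each assumption, for example by varying the adoption share while holding the other two fixed.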

Factors to consider in assessing demand are financial resources, physical limitations, elasticity of demand, cyclicality, substitution and substitution threats.

Factors to consider in assessing supply are ability to supply, unit growth and pricing, regulatory constraints, incentives, scale, niches.

TAM and the Bass Model

The Bass model predicts the number of purchasers in each period, say each year, as well as the total number of purchasers. The Bass model relies on three parameters –

- The coefficient of innovation (p) – This captures mass-market influence

- The coefficient of imitation (q) – This reflects interpersonal influence.

- An estimate of the number of eventual adopters (m) – A parameter that determines the size of the market.

The equation for the Bass model is – N(t) − N(t−1) = [p + q × N(t−1)/m] × [m − N(t−1)]

This formula simply says that the number of new adopters equals the adoption rate multiplied by the number of potential new adopters.

N(t) − N(t−1) is the number of adopters during a period, i.e. the difference between the users now, N(t), and the users in the prior period, N(t−1). The first term on the right side of the equation is the rate of adoption. The second term on the right side is the number of potential users who have not yet adopted the product.
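The recurrence described above, new adopters equal the adoption rate times the remaining potential adopters, can be simulated period by period. The sketch below assumes that nobody has adopted before the first period, so N(0) = 0. The function name and the values of p, q and m are illustrative assumptions and are not taken from the report.

```python
def bass_adopters(p, q, m, periods):
    """Simulate the discrete Bass model.

    Returns two lists: new adopters per period and cumulative adopters N(t).
    """
    new_adopters = []
    cumulative = []
    n_prev = 0.0  # assumption: N(0) = 0, no adopters before the first period
    for _ in range(periods):
        adoption_rate = p + q * n_prev / m  # first term: rate of adoption
        remaining = m - n_prev              # second term: those yet to adopt
        new = adoption_rate * remaining
        n_prev += new
        new_adopters.append(new)
        cumulative.append(n_prev)
    return new_adopters, cumulative


# Hypothetical parameter values, for illustration only.
new, total = bass_adopters(p=0.03, q=0.38, m=1_000_000, periods=15)
for year, (n, c) in enumerate(zip(new, total), start=1):
    print(f"Year {year:2d}: new adopters {n:10,.0f}   cumulative {c:12,.0f}")
```

With N(0) = 0, the first period’s adopters come only from the innovation term p × m. The imitation term grows as cumulative adoption builds, which produces the familiar S-shaped cumulative curve.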

Investor applications of the Bass model –

Investors can use the model in two ways. The first is to estimate product potential based on the parameters from historical diffusions. The second is to start with a company’s stock price and work backwards to the adoption assumptions it implies.

The Bass model also allows solving for the size of peak sales, using this equation –

Size of peak sales = m × (p + q)^2 / (4q).
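As a quick worked example, the peak sales expression can be evaluated directly. The function name and the parameter values below are hypothetical. Note also that this closed form is the peak of the continuous-time Bass curve, so a period-by-period simulation will usually give a slightly different peak.

```python
def bass_peak_sales(p, q, m):
    """Size of peak sales in the Bass model: m * (p + q)^2 / (4q)."""
    if q <= 0:
        raise ValueError("q must be positive")
    return m * (p + q) ** 2 / (4 * q)


# Hypothetical parameter values, for illustration only.
peak = bass_peak_sales(p=0.03, q=0.38, m=1_000_000)
print(f"Peak sales per period: {peak:,.0f}")
```

Because m enters the expression linearly, doubling the estimate of eventual adopters doubles peak sales, while p and q control how sharp the peak is.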

There are typically three stages in industry evolution. In the first stage, the number of competitors grows. In the second stage, there is a large shakeout as many firms exit. In the third stage, the number of competitors and their market shares stabilize. In stage two, a surviving company’s sales can grow faster because the number of competitors is falling.

Limitations of the Bass model –

This model can be helpful in judging TAM, but it has limitations that prevent it from capturing certain considerations.

The first limitation is the replacement cycle. The Bass model is used primarily for forecasting the adoption of a product, and it gives very little attention to the replacement cycle. The replacement cycle concerns the replacement of a product after the initial purchase.

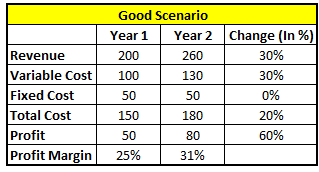

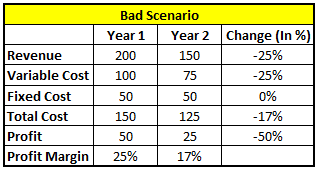

The second limitation is economies of scale. Economies of scale arise when a company’s fixed costs are spread over higher sales, i.e. as the company sells more, its fixed costs are spread over a larger base. These benefits do not continue indefinitely, and companies fail to create value beyond a certain size, which limits the level of TAM. A separate issue with similar implications arises when companies overshoot their markets. Two symptoms of an overshot market are that customers use only a fraction of the functionality the product offers and that they are unwilling to pay for new features.

The third limitation is the network effect. A network effect exists when the value of a product increases as more people use it. The telephone is an example. If only one person uses it, it has very little value, but its value increases as more people start using it. In businesses with strong network effects, the leading companies tend to have higher market shares. Companies spend heavily in the hope that their product will become the product of choice, but in sectors with strong network effects most companies fail to go from early adopters to mainstream users.

Base rates as a reality check –

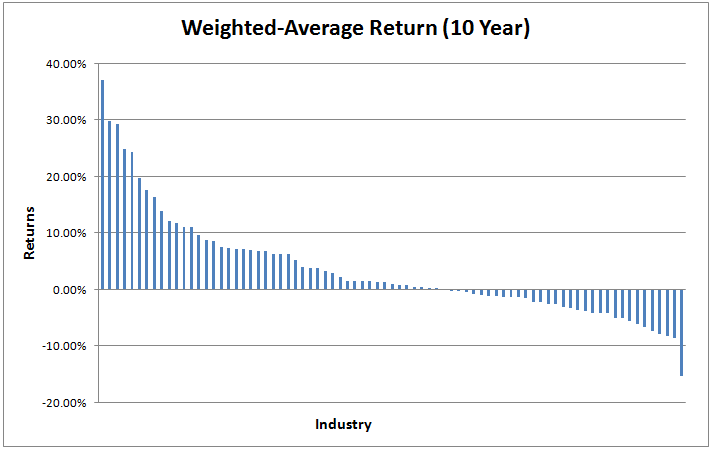

The third method in the triangulation process is careful consideration of base rates. The main idea is to look at what happened to other companies when they were at the same stage. Suppose, for example, that a company’s management says it can grow at a 10% CAGR over the coming 5 years. The base rate method checks how often comparable companies at the same stage actually achieved that growth. Base rates therefore provide a reality check on the output of the first two approaches to estimating TAM.
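One simple way to run such a check is to ask what share of comparable companies grew at least as fast as the forecast. The sketch below is only an illustration. The function name is an assumption, and the peer growth figures are made-up numbers rather than data from the report.

```python
def share_of_peers_at_or_above(forecast_cagr, peer_cagrs):
    """Fraction of peer companies whose historical CAGR met or beat the forecast."""
    if not peer_cagrs:
        raise ValueError("peer_cagrs must not be empty")
    hits = sum(1 for growth in peer_cagrs if growth >= forecast_cagr)
    return hits / len(peer_cagrs)


# Hypothetical 5-year CAGRs for peers at a similar stage (made-up numbers).
peer_cagrs = [0.02, 0.04, 0.05, 0.07, 0.08, 0.11, 0.12, -0.01, 0.03, 0.15]

share = share_of_peers_at_or_above(0.10, peer_cagrs)
print(f"Share of peers that achieved a 10% CAGR or more: {share:.0%}")
```

A low share does not prove the forecast wrong, but it signals that the forecast sits in the tail of what comparable companies have achieved and deserves closer scrutiny.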

TAM and Ecosystems

Generally, a business falls into one of three categories – physical, service and knowledge. The main objective is to understand the characteristics of these categories and to consider how companies can expand across them.

Physical – The main source of cash flow for these businesses is tangible assets such as manufacturing facilities, stores and inventory. Sales growth is tied to asset growth.

Service – The main source of competitive advantage for service businesses is people.

Knowledge – These businesses also rely on people as their main source of competitive advantage.

These categories differ in their economic characteristics. Some considerations are as follows –

Source of advantage – Physical businesses depend on tangible assets, while service and knowledge businesses depend on people.

Investment trigger – Physical and service businesses can grow by adding capacity, either in the form of CAPEX or new employees. Knowledge businesses generally invest to deal with obsolescence.

Products and Protecting Capital – Rival goods are those where one person’s consumption reduces the amount available to others. Non-rival goods are generally difficult to protect because they are relatively easy and cheap to replicate, so the creator of a non-rival good often has difficulty capturing its value. One strategy companies use to increase TAM is to extend into new business categories. This extension brings challenges such as trade-offs between open and closed systems, whether functionality sits in the product or in the cloud, determining which party owns the data, and whether or not to monetize data by selling it to outside parties.